Some doctors are now treating large language models like a treasure, thinking they could be the diagnostic tool of the future! It's a bit like giving doctors an intelligent assistant to help them see patients faster and more accurately. Three researchers, playing detective, conducted an in-depth investigation with ChatGPT as the "suspect". They found that ChatGPT, although smart, is not yet up to the "big job" of being a diagnostic tool. However, they also made an interesting point: more "training" may be able to make ChatGPT better.

They feel that although ChatGPT's ability in clinical diagnosis remains to be seen, it can already be a very useful "assistant". It's like a little helper that can do your chores and help you with ideas at the same time. When it comes to writing research reports and generating medical recommendations, ChatGPT can be a great assistant for doctors and patients, helping them organize their thoughts and providing inspiration. So don't underestimate this "suspect": although it can't completely replace the doctor now, before long it may become the "super assistant" in your life!

How convenient it would be to have a machine that can diagnose conditions as accurately as a doctor! However, a recent study tells us that ChatGPT, a large language model, still has a lot of "stumbling blocks" on its way to becoming a pediatrician. Like a child learning to walk, ChatGPT needs more "practice" and "guidance" to become a qualified pediatrician. Although it has strong language processing capabilities, it seems to get a bit "flustered" when it comes to complex medical conditions. The study found that ChatGPT's accuracy in diagnosing pediatric diseases leaves room for improvement. Sometimes it gives recommendations that don't match the actual condition, which can make both doctors and patients sweat. It seems that turning ChatGPT into a real "doctor" will take more optimization and improvement of its technology and algorithms.

Pediatric diagnosis may sound simple, but it is a battle of wits with "elusive" little patients! Faced with those innocent little faces, doctors not only have to capture key information from what the children say but also have to distinguish between what is true and what is just childish talk. A child's medical history can be a maze. Parents say their child has a pain or an itch, but the doctor has to be like Sherlock Holmes and find the real cause of the disease from these clues. Children's ability to express themselves is limited, and sometimes, because they are afraid of the treatment or are having too much fun, they describe things in a "foggy" way. This tests the doctor's patience and observation skills, and he or she has to act like a detective to find out the truth. Pediatric diagnosis also places uniquely high demands on the doctor's professional ability. A child's body is very different from an adult's, and disease can develop rapidly, so treatment must be extra careful.

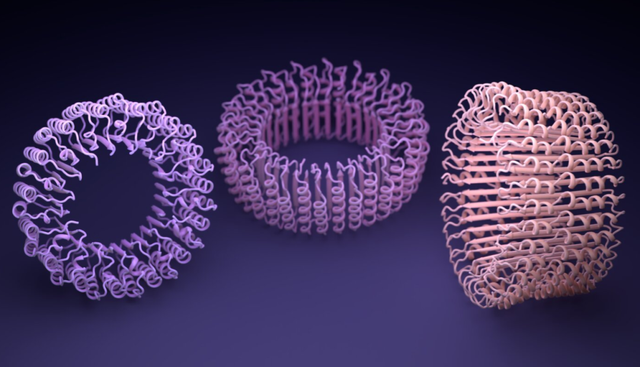

A large language model is, in effect, a talking supercomputer. By learning from a large amount of linguistic data, it becomes smarter and smarter, understanding what we say and offering some useful suggestions. Imagine you have a headache: this assistant can give you a preliminary diagnosis based on your symptoms. How convenient! However, don't think that this assistant is omnipotent. After all, medicine is a very serious field that leaves no room for sloppiness. Although this assistant can help doctors make a diagnosis faster, it can't replace a real doctor yet. Moreover, seeing a doctor requires communication and trust between the doctor and the patient, which can't be replaced by a machine.

Unlike "small models" that specialize in a specific domain, large language models show a wide range of comprehension capabilities and can engage in more natural conversations with humans. At the same time, because their training data is so large and covers many kinds of professional knowledge, they also show surprising depth when discussing professional topics and are often able to make reasonable professional recommendations.

However, the researchers also point out its shortcomings. They believe that with more selective training, ChatGPT might be able to improve its test results and become more reliable. So what can ChatGPT do in the future? Don't forget, although it can't replace a doctor's diagnosis yet, it can already provide a lot of help to doctors and patients. It can be a powerful assistant for writing research reports and generating medical recommendations, helping doctors and patients obtain information faster and more accurately.